The Pectra Fork: Ethereum’s Scaling Hail Mary

Ethereum has come a long way since its early days. Now, the Pectra fork promises Ethereum's most substantial throughput upgrade in four years, with technical improvements that could redefine the relationship between L1s and L2s, and challenge the rollup-centric scaling philosophy.

Back in 2013, four years after the launch of Bitcoin, a 19-year-old college dropout authored a whitepaper that would redefine blockchain technology forever.

Frustrated by Bitcoin's limited scope as merely a peer-to-peer cash system, the University of Waterloo dropout introduced a groundbreaking concept in his whitepaper, 'Ethereum: A Next-Generation Smart Contract and Decentralized Application Platform.' He proposed a blockchain capable of executing programmable agreements, envisioning a network far more versatile than Bitcoin’s initial design. His idea was an open platform that could do more than just transfer digital coins between addresses—it was a foundation for decentralized applications, governed by 'smart contracts'.

That college dropout was Vitalik Buterin. Shortly after the release of the whitepaper, he was joined by Gavin Wood, Charles Hoskinson, Anthony Di Iorio, and Joseph Lubin, and together they turned his vision into Ethereum, which launched in 2015.

In the following years, Ethereum experienced significant growth, both in its user base and technological advancements… Even the platform's native currency, Ether (ETH), saw its value rise from under $1 in 2015 to over $10 by March 2016.

As the network expanded, the Ethereum community realized they needed a reliable and transparent way to propose and implement changes to the network. This realization led to the creation of the Ethereum Improvement Proposals (EIPs).

Modeled after Bitcoin's BIP process and Python's PEP system, EIPs became the “language of progress” for Ethereum. They allowed developers around the globe to collaborate on innovation.

The first of these proposals, EIP-1, was a manifesto of sorts. Created in 2015, it established the framework for proposing and implementing changes within the network.

As Hudson Jameson, a core developer liaison at the Ethereum Foundation, put it: “EIP-1 will be the constitution of this new nation… Not etched in stone, but written in collaborative code.”

Shortly after the first EIP came one of the most important EIPs in the network's history: EIP-20.

This proposal introduced the ERC-20 token standard that we all know and love. It enabled developers to create interoperable tokens on Ethereum overnight. Suddenly, blockchain wasn’t just about money; it became a playground for decentralized finance (DeFi), digital art (NFTs), and DAOs. But even though EIP-20 unlocked Ethereum's creative potential, the network faced its first existential crisis just months later.

In June 2016, an attacker exploited a vulnerability in "The DAO", a $150 million decentralized venture fund built on Ethereum, draining 3.6 million ETH (worth $60M at the time). The community faced an impossible choice: preserve the "code is law" ethos and let the theft stand, or override the blockchain's history to recover the funds.

This sparked Ethereum's first hard fork in July of 2016. Through heated debates on Reddit and developer forums, 85% of miners voted to implement EIP-779, reversing the hack by invalidating the attacker's transactions. The minority who rejected this change continued operating the original chain as Ethereum Classic (ETC), creating a permanent chain split.

What made this intervention possible was a unique circumstance: The DAO's code had a 30-day delay before stolen funds could be withdrawn, giving developers a critical window to act. The Ethereum ecosystem was also much smaller and less interconnected back then. When Bybit exchange lost $1.4B in ETH to hackers in February of 2025, rollback calls predictably surfaced again, but the landscape had fundamentally changed. With DeFi protocols, NFT markets, and cross-chain bridges now deeply intertwined, hackers could instantly scatter stolen funds across the ecosystem, making a surgical intervention impossible without massive collateral damage.

The Hard Fork Meta

Definition:

- The “difficulty bomb” is a mechanism built into Ethereum’s PoW that made mining progressively harder over time, pushing the network to eventually switch to PoS.

- zk-SNARKs are an early form of zero-knowledge cryptography that enable privacy-enhancing transactions.

- In the context of Ethereum upgrades, a "fork" is a protocol change that creates a divergence from the previous blockchain version, with hard forks requiring all nodes to update software to maintain network participation while soft forks remain backward-compatible. Named upgrade forks (like "Shanghai" or "Dencun") represent scheduled implementations of multiple EIPs that collectively advance Ethereum's capabilities.

Even though this unfortunate event created a philosophical split between the members of the community, the Ethereum network gained something undeniably more important, “the hard fork meta”.

In nature, genetic mutations sometimes create new species. Blockchains do this through forks.

Back in 2016, when miners split Ethereum into two chains, one where the DAO hack was reversed (ETH) and one where it wasn’t (ETC), they revealed something profound: forks are blockchain’s mutation mechanism. Forks allow networks to adapt when their environment changes, and Ethereum has gone through quite a few of these mutations since.

Following the DAO Fork, Ethereum’s next major “mutation” came with the Byzantium upgrade. Byzantium was part of the broader Metropolis roadmap. It introduced significant improvements such as support for zk-SNARKs, and it paved the way for the transition to Proof of Stake (PoS) by reducing block rewards and delaying the “difficulty bomb”.

After the Byzantium update, Ethereum introduced the Constantinople upgrade in 2019, which reduced gas costs for certain operations and further postponed the difficulty bomb, signaling that Ethereum was gearing up for a significant transformation.

By 2021, Ethereum had already grown into a multi-billion-dollar ecosystem, but one major issue remained: gas fees. Ethereum’s transaction fee model was unpredictable and often led to users overpaying for transactions.

Enter EIP-1559, the most famous proposal of the London hard fork. It introduced a base fee burn mechanism, permanently removing a portion of ETH from circulation with every transaction. Suddenly, Ethereum had a theoretical deflationary pressure whenever network activity surged, opening the door to a supply reduction over time.

This wasn’t just a technical upgrade; it changed Ethereum’s economic model forever. ETH was no longer just an inflationary asset. Now, at times of high demand, it could become “ultrasound money”.

But all these forks were just stepping stones to Ethereum’s most ambitious transformation yet: The Merge. For years, Ethereum had planned to transition from Proof-of-Work (PoW) to Proof-of-Stake (PoS) but the process was long and complex.

On September 15th, 2022, after many years of deep research, testing, and delays, Ethereum finally merged its Execution Layer (EL) with the Beacon Chain, a PoS network running in parallel since 2020. By changing the consensus mechanism, this upgrade reduced Ethereum's energy consumption by 99.95% overnight.

Ethereum transitioned from relying on miners competing to secure the network to a capital-based security model, where validators staked ETH to participate in consensus. This shift marked a significant evolution in the hard fork methodology, moving beyond mere bug fixes and optimizations. It had now become a means to redefine the very essence of Ethereum.

Ethereum’s Biggest Hard Fork: Pectra

Definition:

- Precompiles in Ethereum are optimized, built-in smart contract functions that handle computationally expensive operations more efficiently than executing them in Solidity or the EVM’s general execution layer. Instead of using standard EVM opcodes, which can be inefficient and gas-intensive, precompiles provide direct access to optimized implementations written in low-level code.

- Opcodes in Ethereum are low-level machine instructions executed by the Ethereum Virtual Machine (EVM). They define the fundamental operations of smart contracts, such as arithmetic (+, -), storage access, and contract calls. Each opcode has a specific gas cost and operates on the EVM stack.

Pectra, named after a blend of Prague and Electra, is the next major upgrade scheduled for its initial deployment in May 2025. Its scope is defined under the meta-EIP, EIP-7600, and due to the sheer number of EIPs included, developers were compelled to split the upgrade into two parts.

Unlike previous hardforks that typically focused on a few major changes, the first deployment of the two-part Pectra upgrade includes 11 EIPs that address a wide range of enhancements, from blob optimizations to staking improvements and user experience upgrades. While the total number of EIPs in the full Pectra upgrade (including the second deployment) is still undetermined, we can explore the EIPs included in the first deployment:

EIP-2537 - Precompile for BLS12-381 curve operations:

Defined by the equation \( y^2 = x^3 + 4 \), BLS12-381 is an elliptic curve used for pairing-based operations in cryptography.

To put it simply, the BLS12-381 curve plays a key role in signature verification (BLS signature schemes and zk-SNARKs) used for Ethereum’s Proof-of-Stake.

However, as it currently stands, the Ethereum Virtual Machine (EVM) does not have precompiles for operations on the BLS12-381 curve, making any operations on the curve way more inefficient and expensive than they need to be.

Precompiles provide direct access to efficient low-level code — not having a precompile for such a core element of PoS is like doing taxes, every single day, WITHOUT a calculator! The addition of precompiles for the BLS curve will remove any unnecessary computational and gas overhead for its operations.

EIP-2935 - Save historical block hashes in state:

Currently, Ethereum’s BLOCKHASH opcode only allows retrieving block hashes from the last 256 blocks. If a smart contract needs an older block hash, it has to rely on external data sources (like off-chain oracles or archival nodes), which is inefficient and introduces trust dependencies.

By storing historical block hashes of the last 8192 blocks, EIP-2935 makes this data available on-chain, improving transparency, security, and usability for smart contracts. This also means:

- Improved Trustless Light Clients - Light clients rely on block headers to verify chain integrity. By making historical hashes accessible on-chain, light clients can operate more trustlessly without needing full nodes or third-party services.

- Improved Fraud Proofs - Many layer 2 rollups (especially optimistic rollups) use fraud proofs, which require referencing older block hashes for verification. Currently, rollups must depend on full nodes or external data providers to fetch past block hashes. Storing them on-chain removes this external dependency and improves rollup security.
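One way to picture the storage scheme is a fixed-size ring buffer keyed by block number. The sketch below is a plain-Python toy model, not the actual on-chain contract: the class name `BlockHashHistory` and its methods are hypothetical, and the window size simply reuses the 8192 figure quoted above.

```python
class BlockHashHistory:
    """Toy model of a fixed-size window of recent block hashes."""

    def __init__(self, window: int = 8192):
        self.window = window
        self.slots = {}      # slot index -> (block number, block hash)
        self.latest = None   # highest block number recorded so far

    def record(self, number: int, block_hash: bytes) -> None:
        # Newer blocks overwrite the slot used `window` blocks earlier.
        self.slots[number % self.window] = (number, block_hash)
        self.latest = number

    def get(self, number: int):
        """Return the hash for `number`, or None if it is outside the window."""
        if self.latest is None or number > self.latest:
            return None
        if self.latest - number >= self.window:
            return None
        stored = self.slots.get(number % self.window)
        if stored is None or stored[0] != number:
            return None
        return stored[1]
```

A contract-side lookup then becomes a bounded read into this window instead of a request to an oracle or archive node.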

EIP-6110 - Supply validator deposits on-chain:

Ethereum’s PoS system has a gap between its two main components:

- Execution Layer (EL) - Ethereum as we know it, where transactions and smart contracts run.

- Consensus Layer (CL) - Beacon Chain, which manages staking and validator consensus.

Currently, Ethereum’s EL doesn’t store validator deposits. Instead, deposits are processed off-chain by the Consensus Layer, which means new validators must rely on external sources (such as full CL nodes or third-party APIs) to confirm their deposit status.

This creates inefficiencies:

- Slower Validator Bootstrapping – New validators must fetch deposit data externally, adding trust dependencies.

- Centralization Risks – Stakers often rely on third-party services to confirm deposits.

- Limited Smart Contract Access – Contracts and rollups can’t verify deposits natively, requiring external oracles.

EIP-6110 fixes these inefficiencies by storing validator deposits on-chain, making staking faster and trustless while reducing off-chain dependencies.

EIP-7002 - Execution layer triggerable exits:

Currently, validator exits are handled exclusively by the Consensus Layer, meaning validators must rely on their own nodes or a third-party service to submit exit requests.

This creates:

- Delays & Complexity – Validators must interact with the CL, which isn’t designed for easy user interaction.

- Trust Dependencies – Validators without direct access to a CL node must rely on external services (centralized APIs, staking providers) to submit exit requests.

EIP-7002 allows validators to trigger their own exits directly from the Execution Layer instead of relying solely on the Consensus Layer. This means a validator can submit an on-chain transaction to initiate their exit rather than waiting for an external Beacon node to process the request.

EIP-7251 - Increase the MAX_EFFECTIVE_BALANCE:

Today, the MAX_EFFECTIVE_BALANCE is fixed at 32 ETH, so every validator is limited to that amount, no matter how much ETH its operator holds.

This constraint leads to a high number of validators under one operator, i.e., to utilize as much of their ETH as possible, one operator is forced to run multiple validators because of the cap, making many of these single-operator-run validators:

- “Redundant” from the perspective of the operator

- And bad for the network, as more validators increase network overhead and complexity.

To solve these redundancy and complexity issues, EIP-7251 raises the cap on how much a validator’s effective balance can be, allowing a single validator to control more than 32 ETH (up to a new limit, e.g., 2048 ETH in this proposal).

It also introduces a new constant, the MIN_ACTIVATION_BALANCE, which remains at 32 ETH to ensure solo-stakers can still participate without consolidation.

The EIP will:

- Allow large node operators who currently must run many separate validators (each capped at 32 ETH) to consolidate their stake into fewer validators.

- (With fewer validators) Reduce the overall P2P communication overhead, lower the amount of data processed (such as fewer BLS signature aggregations), and decrease the BeaconState memory footprint.

- Benefit smaller stakers too—if they have, say, 40 ETH, they don’t have to wait until they accumulate 64 ETH to run two validators. They can run a single validator with a balance above the minimum.
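The consolidation effect can be made concrete with a little arithmetic. The following is a simplified sketch, assuming whole-ETH stakes and ignoring protocol details such as effective-balance rounding and activation queues; the function `plan_stake` is hypothetical, while the 32 ETH and 2048 ETH constants come from the text above.

```python
import math

MIN_ACTIVATION_BALANCE = 32        # ETH needed to activate a validator
OLD_MAX_EFFECTIVE_BALANCE = 32     # cap before EIP-7251
NEW_MAX_EFFECTIVE_BALANCE = 2048   # cap proposed by EIP-7251

def plan_stake(stake_eth: int, max_effective_balance: int):
    """Return (validators, effective_eth, idle_eth) for one operator."""
    if stake_eth < MIN_ACTIVATION_BALANCE:
        return (0, 0, stake_eth)
    # Each validator needs at least 32 ETH to activate.
    max_validators = stake_eth // MIN_ACTIVATION_BALANCE
    # Use as few validators as the cap allows.
    validators = min(max_validators,
                     math.ceil(stake_eth / max_effective_balance))
    effective = min(stake_eth, validators * max_effective_balance)
    return (validators, effective, stake_eth - effective)

# A 40 ETH staker: 8 ETH sits idle under the old cap, none under the new one.
small_old = plan_stake(40, OLD_MAX_EFFECTIVE_BALANCE)
small_new = plan_stake(40, NEW_MAX_EFFECTIVE_BALANCE)

# A 3,200 ETH operator: 100 validators under the old cap, 2 under the new one.
large_old = plan_stake(3200, OLD_MAX_EFFECTIVE_BALANCE)
large_new = plan_stake(3200, NEW_MAX_EFFECTIVE_BALANCE)
```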

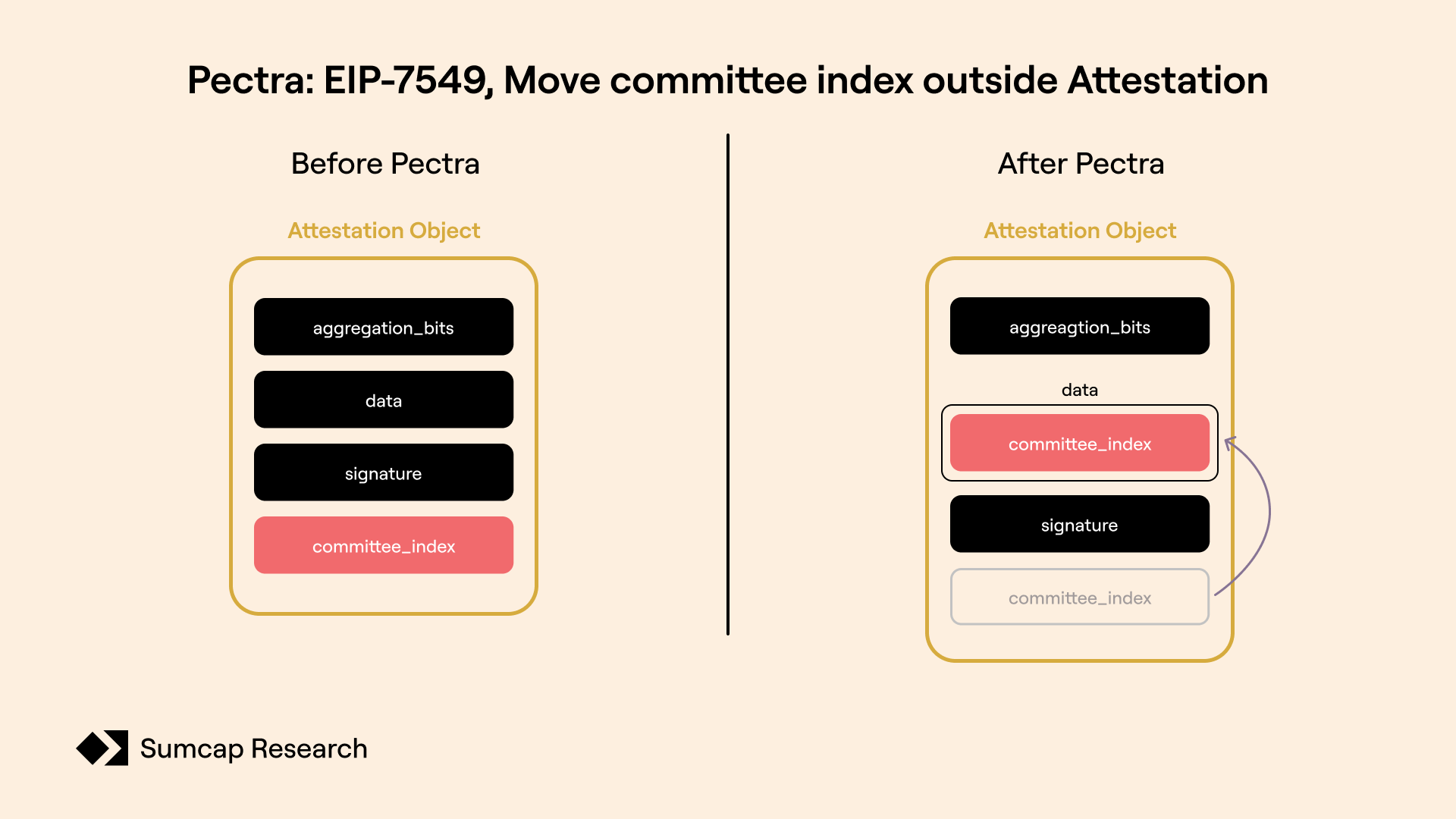

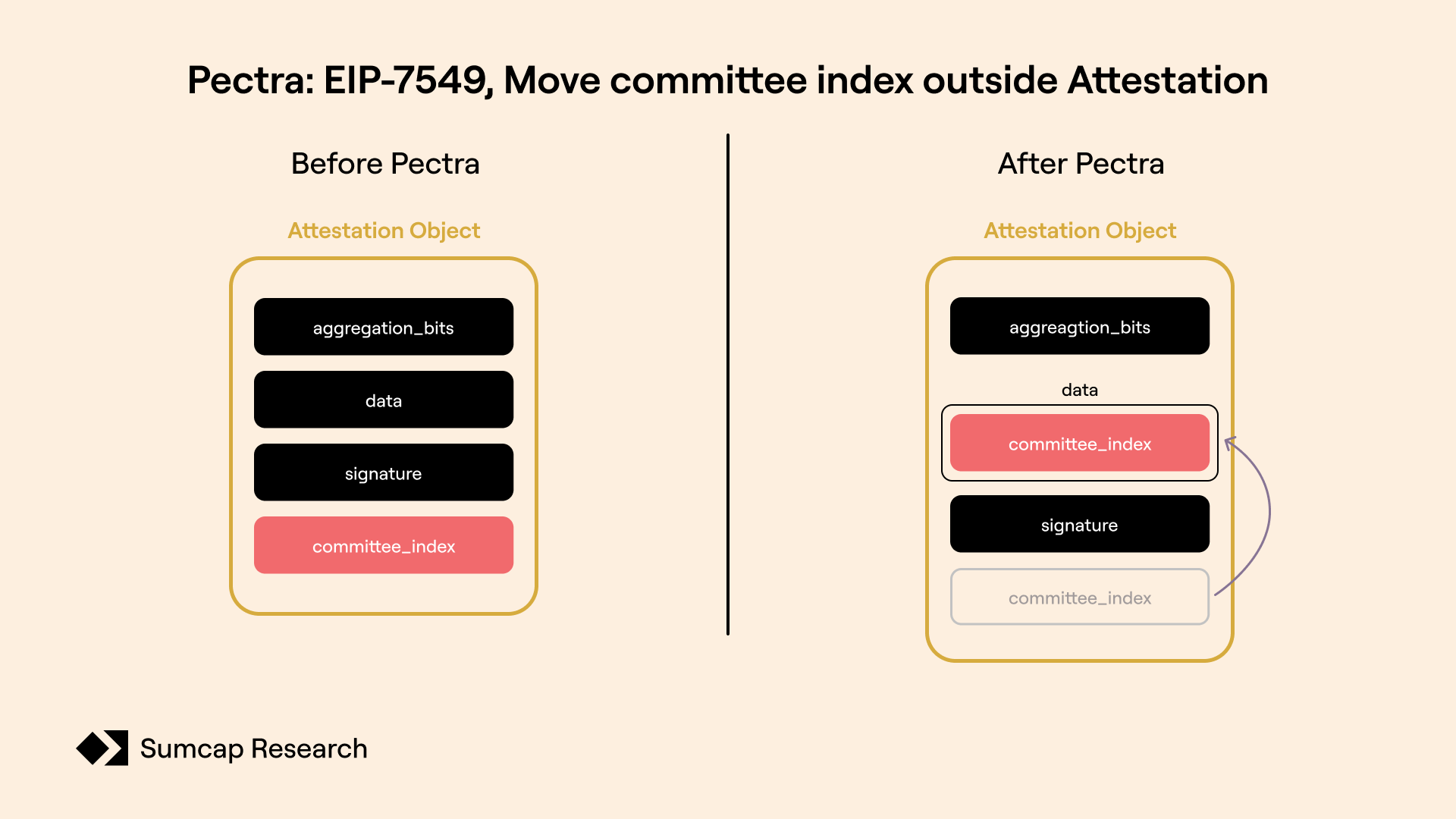

EIP-7549 - Move committee index outside Attestation:

Currently, Ethereum's attestation data structure carries the committee index (which identifies the validator committee an attestation belongs to) inside the AttestationData object, the part of the attestation that validators actually sign. As a result, two attestations that cast exactly the same vote but come from different committees produce different signed messages.

This creates complications:

- Aggregation Limits – Attestations can only be aggregated when their signed data matches exactly, so identical votes from different committees cannot be combined.

- Verification Overhead – More distinct messages per slot means more signatures to verify, which is especially costly for anyone proving consensus inside a ZK circuit.

EIP-7549 moves the committee index outside the signed AttestationData and into the enclosing Attestation object, where a committee_bits field records which committees are included. This simple structural change:

- Simplifies Aggregation – Attestations with the same vote can be aggregated across committees, since the signed data no longer differs by committee.

- Improves Efficiency – Fewer distinct messages need to be verified and packed into blocks.

While the change is minor, it creates a cleaner data structure that better aligns with how attestations are actually used in the protocol.

EIP-7623 - Increase calldata cost:

Currently, calldata costs are relatively low, which allows for larger block sizes when transactions include substantial amounts of calldata. This can lead to significant variability in block sizes, with potential maximums reaching up to 7.15 MB (average block sizes are around 100 KB).

EIP-7623 will increase the gas cost associated with calldata, particularly for transactions that predominantly post data, which will:

- Reduce the maximum block size, and

- Reduce block size variability, improving network efficiency and stability.
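The mechanism behind this is a price floor on data-heavy transactions. Below is a minimal sketch of that calculation, ignoring contract-creation costs. The constants reflect my reading of the EIP-7623 text (4 gas per calldata token normally, a floor of 10 gas per token) and should be treated as assumptions; the function name `tx_gas` is hypothetical.

```python
TX_BASE_GAS = 21_000
STANDARD_TOKEN_COST = 4          # assumed per-token cost for ordinary use
TOTAL_COST_FLOOR_PER_TOKEN = 10  # assumed per-token floor for data-heavy use

def tx_gas(calldata: bytes, execution_gas: int) -> int:
    """Gas charged for a transaction under a calldata floor price."""
    zero_bytes = calldata.count(0)
    nonzero_bytes = len(calldata) - zero_bytes
    # A zero byte counts as one token, a non-zero byte as four.
    tokens = zero_bytes + 4 * nonzero_bytes
    standard = STANDARD_TOKEN_COST * tokens + execution_gas
    floor = TOTAL_COST_FLOOR_PER_TOKEN * tokens
    return TX_BASE_GAS + max(standard, floor)

payload = b"\x01" * 1000
data_only = tx_gas(payload, execution_gas=0)        # floor applies
with_compute = tx_gas(payload, execution_gas=100_000)  # floor does not apply
```

Transactions that do meaningful computation pay what they always did, while transactions that mostly carry data hit the higher floor, which is what shrinks the worst-case block size.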

EIP-7685 - General purpose execution layer requests:

Right now, communication between Ethereum’s EL and CL is rigid and specialized:

- The EL is responsible for processing transactions and building blocks,

- While the CL handles validator consensus and finalization.

When the EL needs the CL to perform an action — like validator exits or withdrawals — it relies on separate predefined mechanisms for each type of request. These operations often involve dedicated transaction types, indirect signaling methods, or extra processing steps. This fragmentation makes the system more complex, harder to upgrade, and less flexible for future extensions.

EIP-7685 introduces a uniform format for EL-to-CL messages with each request including:

- A type prefix (a single byte defining what kind of operation it is; a new block header field, REQUESTS_HASH, commits to the full list of requests),

- and an associated payload

Instead of hardcoding separate mechanisms for different features (like validator exits, partial withdrawals, or consolidation), this EIP provides one unified system. It enables the EL to embed these requests in block bodies, which the CL later processes as part of its state transition.

This way similar requests can be aggregated and processed together reducing overhead and simplifying validation, which can lead to faster, more resource-efficient block processing.
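The "type prefix plus payload" idea is easy to sketch. The code below is illustrative only: the helper names are hypothetical, the type values in the usage lines are placeholders, and the hash-of-hashes commitment is a simplified stand-in for whatever the specification actually mandates.

```python
import hashlib

def encode_request(request_type: int, payload: bytes) -> bytes:
    """Prefix an opaque payload with a one-byte request type."""
    if not 0 <= request_type <= 0xFF:
        raise ValueError("request type must fit in one byte")
    return bytes([request_type]) + payload

def decode_request(raw: bytes):
    """Split an encoded request back into (type, payload)."""
    if not raw:
        raise ValueError("empty request")
    return raw[0], raw[1:]

def commit_requests(requests: list) -> bytes:
    """Illustrative commitment: SHA-256 over the SHA-256 of each request."""
    outer = hashlib.sha256()
    for request in requests:
        outer.update(hashlib.sha256(request).digest())
    return outer.digest()

# Placeholder type values; the real assignments live in the individual EIPs.
exit_request = encode_request(0x01, b"validator-pubkey-bytes")
consolidation = encode_request(0x02, b"source-and-target-pubkeys")
commitment = commit_requests([exit_request, consolidation])
```

Because the consensus layer only needs to dispatch on the first byte, a new request type can be added later without inventing a new channel between the two layers.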


EIP-7691 - Blob throughput increase:

As we all know, Ethereum’s scalability relies on Layer 2 (L2) solutions, like rollups, which compress and batch transactions before submitting them to the main chain.

These rollups depend on Ethereum’s data availability to store compressed transaction data efficiently and use blobs (specific data structures) to post large batches of transaction data more cost-effectively.

Currently, the protocol targets a relatively modest number of blobs per block (for example, a target of 3 and a maximum of 6). EIP-7691 will raise these limits (e.g., increasing the target to 6 and the maximum to 9 blobs per block).

With more blobs per block, the network can handle higher data loads, which reduces congestion and leads to smoother, more predictable performance even during periods of high demand.

Additionally, the improved data throughput enables rollups to operate more cost-effectively, which ultimately lowers transaction fees for users.

EIP-7702 - Set EOA account code:

We’ve all heard of the much-awaited Account Abstraction — “EOAs that can do more than just initiate transactions” — it’s a topic that we’ve already discussed in detail, but to put it simply, Ethereum has two distinct account types that cannot overlap:

- Externally Owned Accounts (EOAs) - Controlled by private keys but cannot contain code

- Contract Accounts - Contain executable code but have no associated private keys

This rigid division creates inefficiencies like:

- 🖥️ Limited Functionality – EOAs can only initiate basic transactions, lacking programmable features

- 🤔 Complex Architectures – Users needing programmability must deploy separate contract wallets or proxy contracts

- 📈 Higher Gas Costs – Deploying and managing these extra contracts consumes unnecessary gas

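By introducing a new transaction type that lets an EOA designate existing contract code to run on its behalf, all while maintaining private key control, EIP-7702 marks the first real step towards full Account Abstraction on Ethereum Mainnet. (The EIP adds a transaction format carrying signed authorizations rather than a new opcode.)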

This change will blend the control of private keys with the programmability of smart contracts in a single account while reducing gas costs (no separate contract deployments needed), introducing cleaner security models, and preserving address identity for users.

EIP-7840 - Add blob schedule to EL config files:

Currently, whenever Ethereum needs to adjust blob-related parameters (like how many blobs can fit in a block), it requires a hard fork of the entire network.

This means all node operators must coordinate to update their software simultaneously, which is a slow, complex process.

It’s like needing city council approval every time you want to adjust the number of buses running on a route.

EIP-7840 proposes a much more efficient approach: adding a predefined blob schedule directly to Execution Layer configuration files. This means that instead of requiring separate hard forks for each adjustment, the network could automatically transition between different blob parameters at predetermined block numbers.

The proposal will:

- Remove the need for separate network upgrades just to adjust blob parameters

- Allow all ecosystem participants to see exactly when and how capacity will increase

- Allow gradual, planned scaling rather than large, disruptive jumps

The Other Half

Definition:

- Gas is Ethereum's unit of computational measurement—a way to quantify how much processing power, memory, and storage each operation requires on the network.

While the first deployment of Pectra is a step forward in Ethereum's capabilities, it's only half the story. The second deployment – scheduled to follow shortly after – includes an even more significant change, particularly around Ethereum's throughput capacity (through potential gas limit increases).

But before diving into the proposed changes, let’s first understand what gas is and how gas limits function in Ethereum.

What is Gas & How Does It Work?

Gas is the “metering system” that powers Ethereum's computational economy. Every operation on Ethereum—from simple ETH transfers to complex smart contract transactions—consumes a specific amount of gas: a simple ETH transfer costs 21,000 gas units, while a smart contract deployment can cost millions of gas units depending on code complexity, and token swaps typically cost between 100,000-200,000 gas units.

Each computational operation has a gas cost assigned to it in the protocol. For example: adding two numbers costs 3 gas, storing a value costs 20,000 gas for the first time (because we’re initiating a new storage slot), while reading from storage is far cheaper (2,100 gas for the first, "cold" access to a slot within a transaction and 100 gas for repeat accesses).

Users pay for this gas in ETH at a rate determined by network demand - how much gwei (10^-9 ETH) per unit of gas. This model ensures that users pay proportionally for the network resources they use, which prevents spam and denial-of-service attacks.
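As a quick worked example of the fee arithmetic, here is a minimal sketch. The function names are hypothetical and the 20 gwei price is an arbitrary illustration, not a current market figure.

```python
WEI_PER_GWEI = 10**9
WEI_PER_ETH = 10**18

def tx_fee_wei(gas_used: int, gas_price_gwei: int) -> int:
    """Fee in wei: gas consumed times the price paid per unit of gas."""
    return gas_used * gas_price_gwei * WEI_PER_GWEI

def wei_to_eth(wei: int) -> float:
    return wei / WEI_PER_ETH

# A plain ETH transfer (21,000 gas) at 20 gwei per gas.
transfer_fee_eth = wei_to_eth(tx_fee_wei(21_000, 20))   # 0.00042 ETH

# A 150,000-gas token swap at the same price costs about 7x more.
swap_fee_eth = wei_to_eth(tx_fee_wei(150_000, 20))      # 0.003 ETH
```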

While individual operations do have fixed costs, Ethereum still needs a way to limit the total computation in each block. This is where the gas limit comes in.

Ethereum's gas limit system is more nuanced than it appears at first glance. What's commonly referred to as the "gas limit" (currently 15 million) is actually a target rather than a hard ceiling. Under EIP-1559, Ethereum implements a dual-limit system: the target gas limit that validators aim for, and a protocol-level hard cap set at twice this amount (currently 30 million).

The reason for such a system is simple: it provides flexibility during periods of high demand.

Blocks can temporarily expand up to the hard cap, processing more transactions but with automatically increasing base fees that prevent sustained overloading. But, what makes this system truly adaptive is the validator adjustment mechanism. Each validator can propose a tiny change to the target gas limit (approximately ±0.1%, or more precisely ±1/1024) when they produce a block.
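The "automatically increasing base fees" part follows a simple rule from EIP-1559: the base fee moves by at most one eighth (12.5%) per block, in proportion to how far the previous block was from the target. The sketch below follows the update formula as I recall it from the EIP; the function name is hypothetical.

```python
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8   # caps the change at 12.5% per block

def next_base_fee(base_fee: int, gas_used: int, gas_target: int) -> int:
    """Base fee (in wei) for the next block, given the parent block's usage."""
    if gas_used == gas_target:
        return base_fee
    delta = (base_fee * abs(gas_used - gas_target)
             // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR)
    if gas_used > gas_target:
        # Fuller-than-target blocks always raise the fee by at least 1 wei.
        return base_fee + max(delta, 1)
    return base_fee - delta

GWEI = 10**9
full_block = next_base_fee(100 * GWEI, 30_000_000, 15_000_000)   # 112.5 gwei
empty_block = next_base_fee(100 * GWEI, 0, 15_000_000)           # 87.5 gwei
```

A run of completely full blocks therefore compounds the fee by 12.5% each time, which is why sustained overloading quickly becomes too expensive to maintain.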

For the target gas limit to increase significantly, several conditions must align:

- 📈 Sustained High Demand: Network usage must remain high over extended periods, indicating genuine need rather than temporary spikes

- 🤝 Validator Consensus: A majority of validators must consistently vote to increase the limit across hundreds of blocks, weighing complex economic incentives. Higher limits can increase their fee revenue by fitting more transactions per block, but larger blocks can:

  - Risk slower propagation, potentially leading to orphaned blocks and lost rewards

  - Increase state growth and, in turn, hardware requirements, potentially excluding some validators

Even with strong consensus, meaningful increases take time—a 100% increase (from 15M to 30M) would require approximately 700 consecutive blocks with unanimous agreement to increase.
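The block count follows from compounding the per-block step. A minimal sketch, assuming every block applies the full 1/1024 increase (the function name is hypothetical):

```python
import math

def blocks_to_scale(factor: float, step: float = 1 / 1024) -> int:
    """Blocks needed to multiply the gas limit by `factor`
    when each block raises it by a fraction `step`."""
    return math.ceil(math.log(factor) / math.log(1 + step))

doubling = blocks_to_scale(2.0)   # roughly 710 blocks

# At one block every 12 seconds (an assumption about slot time),
# that is a little under two and a half hours of unanimous voting.
hours = doubling * 12 / 3600
```

In practice validators rarely vote in lockstep, so real-world increases take considerably longer than this best case.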

This conservative system ensures that gas limit increases only occur when there is both strong demand and validator agreement that the network can handle the additional load. It protects Ethereum from overextension while allowing the network to process more transactions in times of genuine need.

However, it also makes significant gas limit changes extremely rare without protocol-level intervention.

Now that we know how the gas system in Ethereum works, let’s get back to the topic at hand.

The Long History of Gas Limit Evolution

Throughout Ethereum's history, one of the most frequently adjusted parameters has been the gas limit. It has been increased multiple times with a careful balance between network capacity and decentralization.

In the beginning, Ethereum's gas limit was set at just 5,000 units per block. By July 2015, shortly after the network's launch, miners had already voted to increase this limit to 3 million gas.

"Gas limit increases are Ethereum's way of exhaling – making more room for the ecosystem to breathe without compromising its structural integrity," as Ethereum researcher Justin Drake put it.

Subsequent increases followed a pattern of planned expansion:

- 2016: The gas limit was raised to 4.7 million, enabling more complex smart contracts

- 2017 (Byzantium): An increase to 8 million gas followed by optimizations that reduced the cost of certain operations

- 2019 (Istanbul): Gas limits reached 10 million, more than double the 2016 level

- 2020: In the midst of DeFi Summer, gas limits expanded to 12.5 million, alleviating congestion as dApps like Uniswap gained popularity

- 2021: Just before the London upgrade (EIP-1559), gas limits touched 15 million – a 3,000x increase from Ethereum's genesis blocks

Each of these increases came after careful considerations about state growth, node requirements, and network congestion weighed against the benefits of increased throughput. This pattern of regular adjustments established a rhythm to Ethereum's scaling—until it suddenly stopped.

In 2021, following the London hard fork, the gas limit reached 15 million and remained fixed at this level for the next four years.

What makes this stagnation particularly interesting is that those four years witnessed remarkable evolution in every other aspect of Ethereum. The Merge in September 2022 transformed the network from proof-of-work to proof-of-stake, reducing energy consumption by 99.95% and fundamentally changing Ethereum's security model. Client optimizations dramatically improved efficiency, and hardware capabilities continued to grow while costs declined.

Meanwhile, the validator ecosystem expanded dramatically. Validator count increased 5x, from 203,000 to over 1 million, with total staked ETH growing from 6.52M to nearly 34M.

This massive growth in network security and participation happened without a corresponding increase in the network's computational capacity (gas limit).

And finally, after four years of gas limits remaining untouched, Pectra's second deployment addresses this growing gap between the network's computational capacity and its other elements.

But the fundamental goal isn't simply to increase a number; it's to enhance Ethereum's throughput capacity. As the ecosystem has grown exponentially in complexity and adoption, the throughput has remained the same, creating a noticeable bottleneck.

While historically gas limit increases have been the primary way for scaling throughput, Pectra's second deployment allows multiple paths to achieving this goal. Increasing the gas limit is just one approach to process more transactions per unit of time.

In fact, Ethereum can increase its throughput without even touching the gas limit at all. By adjusting other parameters like introducing shorter block times, the blockchain can process more transactions while maintaining the same gas limit per block. This distinction is crucial because different approaches to increasing throughput have different implications for validators, users, and applications.
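The two levers are interchangeable in terms of raw throughput, which a one-line calculation makes clear. This sketch assumes the current 12-second slot time, a figure not stated above; the function name is hypothetical.

```python
def gas_per_second(gas_per_block: int, block_time_s: float) -> float:
    """Throughput expressed as gas processed per second."""
    return gas_per_block / block_time_s

current = gas_per_second(30_000_000, 12)        # 2.5M gas/s at today's cap
bigger_blocks = gas_per_second(60_000_000, 12)  # double the gas per block
faster_blocks = gas_per_second(30_000_000, 6)   # halve the block time

# Both routes land on the same throughput of 5M gas/s.
same_throughput = bigger_blocks == faster_blocks
```

The headline number is identical, but the trade-offs differ: bigger blocks stress propagation of each block, while faster blocks tighten the time validators have to receive, execute, and attest.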

What comes with a throughput increase?

Before diving into the specific approaches proposed in Pectra's second deployment, it's important to understand what increasing Ethereum's throughput actually means for the network and its participants. Any throughput increase, regardless of the implementation method, affects three fundamental aspects of the blockchain: storage requirements, bandwidth usage, and computational demands.

Storage Requirements

When considering throughput increases, storage has two distinct challenges: state growth and history growth. Both affect node operators, but in different ways and at different scales.

State Growth

Ethereum's state is the network's complete memory at any given moment - it's the record that every full node must maintain. This state is made up of all account balances, smart contract code, and storage values across the network. Looking at its composition, we see that ERC20 tokens occupy the largest portion at 27.2%, followed closely by ERC721 contracts (NFTs) at 21.6%, with user accounts representing 14.1% of the total state.

Ethereum's state is currently growing around 2.5 GB per month (30 GB per year), with some of the main contributors to this being (as per Paradigm’s analysis from 2024):

- ERC20 Contracts (the token standard)

- ERC721 Contracts (the NFT standard)

- EOAs

- DEX/DeFi

Historically, this state growth has been viewed as a potential issue for network scaling. At first glance, doubling throughput might suggest doubling this growth rate to 60 GB per year, raising concerns about storage costs for validators.

However, a closer look reveals that this concern is largely outdated. Storage hardware has advanced on an exponential curve, with SSD prices halving approximately every two years, while state growth follows a linear pattern. This hardware acceleration means that even with doubled throughput, the relative cost and burden of storage actually decreases over time.

Further diminishing this concern is the reality of hardware purchasing costs. Solo validators will soon need more than 2 TB of storage regardless of throughput changes. Since storage hardware is typically sold in powers of two (2TB, 4TB, 8TB), validators will already be investing in 4TB drives, creating more “room”, that could be utilized without additional cost — making it a good opportunity to increase throughput (as the storage infrastructure will already be in place).

Modern consumer hardware (4TB drives) could support current rates of state growth for many years without exhaustion. This means that state growth, while still a factor to consider, isn't the immediate bottleneck many once thought it to be.

History Growth

While state growth dominated scaling discussions in the past, recent analysis reveals that history growth – the accumulation of blocks and transactions – now presents a more significant bottleneck. History currently grows at approximately 19.3 GiB per month, which is 6-8x faster than state growth, with most of the growth coming from the Bridge/Rollup, and DEX/DeFi categories.

History data (blocks and transactions) now occupies 3x as much storage space as state. At current growth rates, the combined storage burden (state + history) for Ethereum nodes will reach a critical threshold of 1.8 TiB within 2-3 years. This would force many node operators running on standard 2TB drives (which provide only 1.8 TiB of usable space) to upgrade their hardware.

Any throughput increase would accelerate this timeline proportionally. This is an important consideration when thinking about different approaches to increasing throughput, as the impact on history growth could determine how quickly node operators need to upgrade their hardware (assuming they have 2 TB of storage).
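To see how a throughput change shifts that timeline, here is a minimal sketch. The current footprint of 1,200 GiB is a hypothetical starting point, and the 2.5 GiB per month of state growth is an assumption chosen to be roughly consistent with the 6-8x ratio above; the 19.3 GiB per month and 1.8 TiB figures come from the text.

```python
def months_until_full(current_gib: float,
                      growth_gib_per_month: float,
                      capacity_gib: float,
                      throughput_multiplier: float = 1.0) -> float:
    """Months until storage is full, assuming linear growth that scales
    in proportion to throughput."""
    if current_gib >= capacity_gib:
        return 0.0
    monthly = growth_gib_per_month * throughput_multiplier
    return (capacity_gib - current_gib) / monthly


CAPACITY_GIB = 1.8 * 1024   # usable space on a 2 TB drive (from the text)
GROWTH_GIB = 19.3 + 2.5     # history growth (text) + assumed state growth
CURRENT_GIB = 1200.0        # hypothetical combined footprint today

baseline = months_until_full(CURRENT_GIB, GROWTH_GIB, CAPACITY_GIB)
doubled = months_until_full(CURRENT_GIB, GROWTH_GIB, CAPACITY_GIB,
                            throughput_multiplier=2.0)
print(f"baseline: {baseline:.1f} months, doubled throughput: {doubled:.1f} months")
```

With these inputs the baseline lands inside the 2-3 year window quoted above, and doubling throughput halves the remaining time.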

It’s worth noting that the Ethereum community is already working to address this bottleneck. EIP-4444 proposes to limit history retention to approximately one year, allowing nodes to discard older history data. If implemented, this would cut node storage requirements roughly in half and prevent history growth from continuing indefinitely. While a full discussion of EIP-4444 is beyond the scope of this analysis, its existence demonstrates that there are already proposals in development that could alleviate the history growth bottleneck before it becomes critical.

Bandwidth Requirements

Unlike storage, bandwidth is a more immediate challenge for throughput scaling. Current Ethereum nodes need approximately 2 MB/s of bandwidth, with much of this devoted to consensus layer activities like blob gossiping and attestation aggregation.

The first deployment’s implementation of EIP-7691 (blob throughput increase), which we already discussed, will have significant implications for these bandwidth requirements even before any gas limit changes are considered. Currently, the protocol targets a modest number of blobs per block (a target of 3 and a maximum of 6). EIP-7691 will double the target to 6 blobs per block and increase the maximum to 9 blobs per block.

This change alone will substantially increase bandwidth requirements. Each blob is approximately 128 KB in size (or more precisely, 127.7 KB), meaning the current maximum of 6 blobs represents about 768 KB of additional data per block. Increasing this to 9 blobs would raise the maximum to 1.15 MB per block just for blob data alone. Considering that bandwidth is already a significant consideration at 2 MB/s, with a substantial portion dedicated to blob gossiping, this increase will push bandwidth requirements closer to 3 MB/s even before any gas limit changes.
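The blob arithmetic above is easy to reproduce. The sketch below uses the blob layout from EIP-4844 (4096 field elements of 32 bytes each) and assumes 12-second slots; it counts raw blob payload only and does not model gossip overhead or any other traffic.

```python
BLOB_BYTES = 4096 * 32   # 131,072 bytes, i.e. 128 KiB per blob
SLOT_SECONDS = 12        # assumed current slot time


def blob_kib_per_block(blob_count: int) -> float:
    """Raw blob payload per block, in KiB."""
    return blob_count * BLOB_BYTES / 1024


def blob_kib_per_second(blob_count: int, slot_seconds: int = SLOT_SECONDS) -> float:
    """Raw blob payload averaged over one slot, in KiB/s."""
    return blob_kib_per_block(blob_count) / slot_seconds


scenarios = [("current target", 3), ("current max", 6),
             ("EIP-7691 target", 6), ("EIP-7691 max", 9)]
for label, count in scenarios:
    print(f"{label:16s} {blob_kib_per_block(count):7.0f} KiB/block "
          f"{blob_kib_per_second(count):6.1f} KiB/s")
```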

When combined with potential gas limit increases, this presents a compounding effect on bandwidth requirements:

- ➕ Additive Bandwidth Pressure: If both blob capacity and gas limits increase, validator bandwidth requirements could potentially double from current levels of 2 MB/s

- 📊 Peak Bandwidth Concentration: During periods of high network activity, validators would need to handle both maximum-sized regular blocks and maximum blob counts

- 🗺️ Geographic Disparities: This increased bandwidth requirement would further disadvantage validators in regions with limited internet infrastructure

Throughput increases through gas limit changes would affect bandwidth in several interconnected ways:

- 📈 Block Size Implications: Larger or more frequent blocks mean more data must be propagated across the network

- 📊 Peak vs. Average Demands: Different throughput approaches can affect whether bandwidth increases are evenly distributed or concentrated in peaks

- ⏳ Propagation Delays: Larger blocks take longer to propagate, potentially increasing orphan rates and affecting consensus stability

Current average block sizes post-Dencun hover around 75 KB, with historical maximums reaching 270 KB. Doubling throughput via gas limit increases could potentially double these figures. In worst-case scenarios with full blocks and maximum blobs, validators would need to handle up to 3.4 MB for regular block data plus 1.15 MB for blob data, requiring a 100%+ increase in peak bandwidth capacity from today's levels.

This bandwidth consideration explains why the method of increasing throughput matters significantly. Implementations that distribute bandwidth usage more evenly could prove more accessible than those that increase peak requirements. For example, distributing the same amount of data across more frequent, smaller blocks creates a more consistent bandwidth usage pattern than concentrating it in larger, less frequent blocks.

The bandwidth implications of EIP-7691 combined with gas limit increases show the importance of carefully picking the timing and approach to throughput scaling — more on this later.

Computational Demands

Computation is the least concerning constraint for throughput increases. Block processing typically takes less than one second even on simpler hardware, leaving plenty of room for growth.

While higher throughput does increase computational demands, modern hardware can easily handle these increases.

One specific concern involves the MODEXP operation, which performs modular exponentiation for cryptographic calculations: it computes $\text{base}^{\text{exponent}} \bmod \text{modulus}$. What makes MODEXP tricky is that when you feed it larger numbers, it needs disproportionately more computing power. Doubling the input size roughly doubles the processing time for simpler operations, but for MODEXP the processing time grows faster than that.
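To illustrate why the cost is disproportionate, here is a textbook square-and-multiply sketch that also counts modular multiplications. It is a generic algorithm for illustration, not the implementation used by any Ethereum client. The number of multiplications grows with the bit length of the exponent, and each multiplication itself gets more expensive as the operands get larger, so the two effects compound.

```python
from typing import Tuple


def modexp(base: int, exponent: int, modulus: int) -> Tuple[int, int]:
    """Square-and-multiply modular exponentiation.

    Returns (result, number_of_modular_multiplications).
    """
    if modulus == 1:
        return 0, 0
    result, mults = 1, 0
    base %= modulus
    while exponent > 0:
        if exponent & 1:                   # multiply step for each set bit
            result = (result * base) % modulus
            mults += 1
        base = (base * base) % modulus     # squaring step for every bit
        mults += 1
        exponent >>= 1
    return result, mults


# The multiplication count tracks the exponent's bit length.
small = modexp(7, 2 ** 8, 1009)
large = modexp(7, 2 ** 64, 1009)
print(small[1], large[1])
```

Python's built-in three-argument `pow(base, exponent, modulus)` computes the same result and is what one would normally use.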

Fortunately, ongoing improvements to client software and hardware offset these increased demands. Even if we doubled Ethereum's throughput, the computational requirements would still be manageable for most validators.

In normal conditions, processing a block takes less than 1 second, even on older or less powerful machines. While special cryptographic operations like MODEXP could potentially cause slowdowns in certain scenarios, they don't represent a serious obstacle to increasing throughput.

Credit to Paradigm’s and Rebuffo’s research for these insights. You can find the original posts here:

https://www.paradigm.xyz/2024/03/how-to-raise-the-gas-limit-1

https://www.paradigm.xyz/2024/05/how-to-raise-the-gas-limit-2

https://giulioswamp.substack.com/p/are-we-finally-ready-for-a-gas-limit

Now that we’ve gone over the hardware implications that come with increasing Ethereum’s throughput, let’s examine the two distinct approaches that could be included in Pectra's second deployment:

EIP-7782: The Time-Based Approach

EIP-7782 takes a unique approach: rather than adjusting the gas limit to increase throughput, it proposes reducing Ethereum’s slot time from 12s to just 8s. Cutting the slot time by 33% means a block is produced every 8 seconds instead of every 12, so with an unchanged gas limit the network would process 50% more blocks (7.5 per minute instead of 5) in the same amount of time, effectively increasing throughput.
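A quick check of the slot-time arithmetic, assuming the per-block gas limit stays unchanged (30 million is used here purely as an illustrative value). Note that a one-third cut in slot time is not the same as a one-third increase in block rate: 12 / 8 = 1.5, so the block rate rises by half.

```python
def blocks_per_minute(slot_seconds: float) -> float:
    """Number of slots (and at most that many blocks) per minute."""
    return 60.0 / slot_seconds


def gas_per_second(gas_limit: int, slot_seconds: float) -> float:
    """Maximum gas the chain can process per second."""
    return gas_limit / slot_seconds


GAS_LIMIT = 30_000_000  # illustrative, assumed unchanged per block

before = gas_per_second(GAS_LIMIT, 12)
after = gas_per_second(GAS_LIMIT, 8)
increase = after / before - 1

print(f"blocks/min: {blocks_per_minute(12)} -> {blocks_per_minute(8)}")
print(f"gas/s: {before:,.0f} -> {after:,.0f} (+{increase:.0%})")
```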

But what’s the rationale behind this approach? Why not just increase the gas limit?

Well, as we’ve already discussed, the most pressing hardware issue that comes with an increase in throughput isn’t storage or computation, but rather bandwidth.

Most of you already know this, but bandwidth refers to the maximum amount of data that can be transferred over a network or communication channel in a given time. By decreasing the slot time instead of increasing the gas limit, EIP-7782 distributes the same amount of data over more frequent, smaller blocks rather than cramming more data into the same number of blocks.

This difference is very important for network health.

When block sizes increase (as would happen with a gas limit increase), the peak bandwidth requirements spike accordingly. These spikes can overwhelm validators with limited connectivity, potentially leading to missed attestations, increased block orphan rates, and network centralization. In contrast, maintaining current block sizes but increasing their frequency creates a more consistent, predictable bandwidth usage pattern.
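The difference between peak and average load can be made concrete with a small comparison. The sketch below takes the 75 KB average block size quoted earlier and compares two hypothetical ways of reaching the same average data rate: larger blocks at 12-second slots versus unchanged blocks at 8-second slots. The 1.5x multiplier is chosen only so that both schedules carry the same data per second.

```python
from dataclasses import dataclass


@dataclass
class Schedule:
    name: str
    block_kb: float       # data carried by one block (the per-block burst)
    slot_seconds: float   # time between blocks

    @property
    def avg_kb_per_second(self) -> float:
        """Average data rate over a slot."""
        return self.block_kb / self.slot_seconds


BASE_BLOCK_KB = 75.0  # average post-Dencun block size from the text

today = Schedule("today", BASE_BLOCK_KB, 12)
bigger = Schedule("1.5x larger blocks", BASE_BLOCK_KB * 1.5, 12)
faster = Schedule("8-second slots", BASE_BLOCK_KB, 8)

for s in (today, bigger, faster):
    print(f"{s.name:20s} burst {s.block_kb:6.1f} KB  "
          f"average {s.avg_kb_per_second:6.3f} KB/s")
```

Both alternatives move the same amount of data per second on average, but only the larger-block schedule increases the size of the burst each validator has to receive and forward at once.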

Think of it like this - it's much easier for Amazon to send 10 small packages at regular intervals than to ship one massive package weighing 10 tons all at once. The total contents delivered are the same, but the logistics are more manageable with the smaller, frequent shipments.

The time-based approach also brings a crucial distinction in how throughput increases are delivered. Unlike gas limit increases which only allow more transactions per block, reducing slot time provides a dual benefit: more total transactions AND faster confirmations.

This latency improvement is particularly significant for based rollups, which rely heavily on Ethereum's L1 for data availability and security. These rollups post their transaction data to Ethereum but face a critical bottleneck in how quickly L1 blocks are produced. By reducing slot time from 12 to 8 seconds, based rollups could reduce their end-to-end finality times by a full 4 seconds per block.

For applications built on these rollups that require fast finality—like high-frequency trading, real-time gaming, or cross-chain operations — this 33% latency reduction represents a substantial competitive advantage.

In essence, EIP-7782 offers a dual improvement: more transactions per minute can be propagated across the network with faster confirmations.

However, reducing slot time does create new challenges for consensus participants. Validators would need to produce and broadcast attestations more frequently, increasing their operational overhead. The compressed timeframe between slots also intensifies what are often called "timing games" – strategic behaviours related to transaction submission, block propagation, and MEV leakage.

For example, block proposers would have less time to gather transactions, construct blocks, and broadcast them. With only 8 seconds per slot (compared to 12), any network delays would have a proportionally larger impact on a proposer's ability to submit their block on time. Sophisticated block builders with optimized infrastructure might gain advantage in MEV extraction under these tighter time constraints.

Distributed validator setups also face particular challenges from reduced slot times. Specifically, Distributed Validator Technology (DVT) implementations - where multiple operators collectively control a single validator - would need to coordinate their operations within tighter timeframes. These systems use threshold signatures that require multiple participants to contribute before reaching consensus on validator actions.

With the slot time reduced from 12 to 8 seconds, DVT setups would have 33% less time to:

- 📨 Communicate proposed attestations between participants

- 🤝 Reach agreement on what to sign

- 📝 Generate and combine partial signatures

- 📤 Submit the final aggregated signature

This compressed timeframe could be very challenging for geographically distributed DVT clusters, where network latency between participants already consumes precious milliseconds. For example, a DVT setup with participants across different continents might struggle to complete all required communication rounds within the shortened 8-second window, potentially increasing missed attestations.
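A rough way to reason about this pressure is to look at how much of a slot is consumed by network round trips alone. The sketch below is a deliberate simplification: it assumes one round trip per step in the list above and a hypothetical 150 ms intercontinental round-trip time, and it ignores signing time, retries, and protocol deadlines inside the slot.

```python
def coordination_share(rtt_ms: float, rounds: int, slot_seconds: float) -> float:
    """Fraction of one slot spent purely on network round trips."""
    return (rounds * rtt_ms) / (slot_seconds * 1000.0)


RTT_MS = 150.0   # hypothetical intercontinental round-trip time
ROUNDS = 4       # one round trip per coordination step (a simplification)

share_12s = coordination_share(RTT_MS, ROUNDS, 12)
share_8s = coordination_share(RTT_MS, ROUNDS, 8)
print(f"12s slots: {share_12s:.1%} of the slot, 8s slots: {share_8s:.1%}")
```

The absolute latency does not change; what shrinks is the slack around it, which is why clusters that are already close to their deadlines are the ones most exposed.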

Other validation approaches that rely on additional coordination steps, such as validator setups using advanced anti-MEV strategies or validators participating in external block-building marketplaces (like PBS), would face similar timing pressures.

These coordination challenges highlight an important truth: while EIP-7782's time-based approach might be an elegant solution to bandwidth concerns and provides latency benefits, it introduces complexity for certain validator configurations. This tension between different scaling approaches is precisely why Ethereum's second scaling proposal, EIP-7783, takes a fundamentally different direction.

EIP-7783: The Limit-Based Approach

If EIP-7782 represents scaling through time, then EIP-7783 is scaling through space. Instead of changing how frequently blocks are produced, the proposal follows Ethereum's historical pattern of increasing the gas limit – but with a crucial change that addresses the shortcomings of previous gas limit increases.

EIP-7783 proposes a predetermined, phased schedule for gas limit increases implemented directly at the protocol level. Instead of relying on validator voting or a one-time adjustment, it defines a careful implementation that would gradually increase the gas limit from 30 million to 60 million (equivalently, the gas target from 15 million to 30 million).

The elegance of this approach lies in its predictability and careful pacing. By implementing gas limit increases as a gradual, predetermined schedule, EIP-7783 gives the entire ecosystem time to adapt at each phase.

But what makes it particularly interesting is that it offers multiple implementation strategies, each with different implications for how throughput would grow over time:

Linear Gas Limit Increase Strategy

The simplest approach, the Linear Strategy, would increase the gas limit by a fixed amount with each block.

Defined as:

    def linear_increase_gl(blockNum: int, blockNumStart: int, initialGasLimit: int, r: int, gasLimitCap: int) -> int:
        # Before the start block the gas limit is unchanged.
        if blockNum < blockNumStart:
            return initialGasLimit
        else:
            # Grow by r gas per block, never exceeding the cap.
            return min(gasLimitCap, initialGasLimit + r * (blockNum - blockNumStart))

Where:

- blockNum is the block number for which the gas limit is being calculated.

- blockNumStart is the block number at which the gas limit increase starts.

- initialGasLimit is the initial gas limit at block blockNumStart.

- r is the rate at which the gas limit increases per block.

- gasLimitCap is the maximum gas limit that can be reached (60M).

This strategy creates a smooth, predictable slope of gas limit increases, where network capacity grows at a constant rate over time. For validators and node operators, this means a gradual, continuous adaptation to slightly larger blocks.

For example, if we set the rate to just 6 gas units per block, the gas limit would increase by approximately 15.8 million over a year (about 2.63 million blocks at 12-second slots), so climbing from 30 million to the 60 million cap would take a little under two years. That pace gives the ecosystem ample time to adapt. Users would experience a steady improvement in transaction throughput and potentially declining fees, but the changes from day to day would be nearly imperceptible.
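The yearly effect of a given rate is simple to verify. The sketch below assumes 12-second slots, a 30 million starting gas limit, and a start block of 0; it reuses the linear formula defined above with r = 6.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60
SLOT_SECONDS = 12  # assumed current slot time


def linear_increase_gl(blockNum: int, blockNumStart: int, initialGasLimit: int,
                       r: int, gasLimitCap: int) -> int:
    if blockNum < blockNumStart:
        return initialGasLimit
    return min(gasLimitCap, initialGasLimit + r * (blockNum - blockNumStart))


blocks_per_year = SECONDS_PER_YEAR // SLOT_SECONDS
after_one_year = linear_increase_gl(blocks_per_year, 0, 30_000_000, 6, 60_000_000)

# Blocks (and years) needed to climb from 30M to the 60M cap at r = 6.
blocks_to_cap = (60_000_000 - 30_000_000) // 6
years_to_cap = blocks_to_cap * SLOT_SECONDS / SECONDS_PER_YEAR

print(f"blocks per year: {blocks_per_year:,}")
print(f"gas limit after one year: {after_one_year:,}")
print(f"years to reach the cap: {years_to_cap:.2f}")
```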

To clearly illustrate the impact of this approach on the gas limit, some parameter values have been deliberately set to more pronounced levels. Specifically, we use r = 100,000 to emphasize the rate of change.

The advantage of this strategy is its predictability and smoothness – there are no sudden jumps that might destabilize the network. However, this approach offers limited opportunity to pause and evaluate the impact of increases before continuing further.

Stepwise Linear Gas Limit Increase Strategy

The Stepwise Strategy introduces an important modification – instead of increasing with every block, the gas limit would increase by a fixed amount after a specified number of blocks (a "cooldown period").

Defined as:

    def stepwise_increase_gl(blockNum: int, blockNumStart: int, r: int, step_blocks_interval: int, initialGasLimit: int, gasLimitCap: int) -> int:
        # Before the start block the gas limit is unchanged.
        if blockNum < blockNumStart:
            return initialGasLimit
        else:
            # Add r once per completed interval (integer division), never exceeding the cap.
            return min(gasLimitCap, initialGasLimit + r * ((blockNum - blockNumStart) // step_blocks_interval))

Where:

- blockNum is the block number for which the gas limit is being calculated.

- blockNumStart is the block number at which the gas limit increase starts.

- initialGasLimit is the initial gas limit at block blockNumStart.

- step_blocks_interval is the number of blocks after which the gas limit increases (cooldown period).

- r is the rate at which the gas limit increases per step.

- gasLimitCap is the maximum gas limit that can be reached (60M).

This strategy creates a stair-step pattern of growth, where the gas limit remains stable for a period before jumping to the next level.

For validators, this approach enables distinct adjustment periods. After each increase, they would experience a plateau during which network conditions can stabilize, and any issues can be identified before the next increase. Users would see more noticeable improvements in throughput at each step, rather than continuous, tiny improvements.

The Stepwise approach has three significant advantages:

- It creates clear, measurable phases for gathering performance data

- It allows the community to evaluate each increase before proceeding further

- It provides natural pause points where increases could potentially be halted if problems emerge

To clearly illustrate the impact of this approach on the gas limit, some parameter values have been deliberately set to more pronounced levels. Specifically, we use r = 100,000 and step_blocks_interval = 5 to emphasize the rate of change.
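The staircase shape is easy to see by evaluating the stepwise formula over a handful of blocks. This sketch uses the exaggerated illustration values (r = 100,000 and a 5-block interval) and assumes a 30 million starting limit and a start block of 0.

```python
def stepwise_increase_gl(blockNum: int, blockNumStart: int, r: int,
                         step_blocks_interval: int, initialGasLimit: int,
                         gasLimitCap: int) -> int:
    if blockNum < blockNumStart:
        return initialGasLimit
    steps = (blockNum - blockNumStart) // step_blocks_interval
    return min(gasLimitCap, initialGasLimit + r * steps)


# Gas limit for the first 12 blocks after the start block.
values = [stepwise_increase_gl(n, 0, 100_000, 5, 30_000_000, 60_000_000)
          for n in range(12)]
for n, gl in enumerate(values):
    print(f"block {n:2d}: {gl:,}")
```

Each value holds for five blocks before stepping up by 100,000, which is the plateau the community would use to evaluate the network before the next increase.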

Exponential Gas Limit Increase Strategy

The most aggressive option, the Exponential Strategy, would double the gas limit after a specified number of blocks.

Defined as:

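    def exponential_increase_gl(blockNum: int, blockNumStart: int, doubling_blocks_interval: int, initialGasLimit: int, gasLimitCap: int) -> int:
        # Before the start block the gas limit is unchanged.
        if blockNum < blockNumStart:
            return initialGasLimit
        else:
            doublings = (blockNum - blockNumStart) / doubling_blocks_interval
            # Bound the exponent so very large block numbers cannot overflow a float.
            growth = 2 ** min(doublings, 64)
            # int() keeps the declared return type; the cap still applies.
            return min(gasLimitCap, int(initialGasLimit * growth))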

Where:

- blockNum is the block number for which the gas limit is being calculated.

- blockNumStart is the block number at which the gas limit increase starts.

- initialGasLimit is the initial gas limit at block blockNumStart.

- doubling_blocks_interval is the number of blocks after which the gas limit doubles.

- gasLimitCap is the maximum gas limit that can be reached (60M).

This creates an accelerating curve of gas limit increases, starting slowly but growing more rapidly over time.

For validators, this approach would require more planning for hardware and bandwidth needs as block sizes increase at an accelerating pace. Users would experience modest improvements at first, followed by more dramatic throughput increases as time goes on.

The Exponential Strategy has the potential for more substantial scaling in the long run. It would enable Ethereum to expand capacity more rapidly if chosen, allowing for greater throughput growth compared to the linear approaches.

To clearly illustrate the impact of this approach on the gas limit, the parameter values have been deliberately set to more pronounced levels. Specifically, we use doubling_blocks_interval = 100 to emphasize the rate of change.

Something worth noting is that while these strategies differ significantly in their growth patterns, they share a critical safety feature – the gasLimitCap parameter. Notice how each formula includes min(gasLimitCap, x)? That part of the code ensures that regardless of how aggressive the growth function might be, the gas limit will never exceed a predetermined maximum value. In other words, it stops the gas limit from increasing indefinitely.
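The effect of the cap is most visible on the exponential strategy. The sketch below is an adaptation of the exponential formula, with an added guard that returns the cap directly once enough doublings have elapsed (this guard is an assumption of this example, added so that very large block numbers cannot overflow a float). It uses the exaggerated doubling interval of 100 blocks and assumes a 30 million starting limit.

```python
import math


def exponential_increase_gl(blockNum: int, blockNumStart: int,
                            doubling_blocks_interval: int,
                            initialGasLimit: int, gasLimitCap: int) -> int:
    if blockNum < blockNumStart:
        return initialGasLimit
    doublings = (blockNum - blockNumStart) / doubling_blocks_interval
    # Guard (added for this sketch): once the cap is reached, stop exponentiating.
    if doublings >= math.log2(gasLimitCap / initialGasLimit):
        return gasLimitCap
    return min(gasLimitCap, int(initialGasLimit * 2 ** doublings))


START, CAP, INTERVAL = 30_000_000, 60_000_000, 100
for block in (0, 25, 50, 75, 100, 10_000_000):
    gl = exponential_increase_gl(block, 0, INTERVAL, START, CAP)
    print(f"block {block:>10,}: {gl:,}")
```

However far into the future the block number is, the result is clipped at gasLimitCap.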

Ethereum's Rollup-Centric Roadmap: A Shift in the Scaling Paradigm

After looking at Pectra's technical improvements and the various approaches to increasing Ethereum's throughput capacity, an important question pops up: How might these changes affect Ethereum's broader scaling strategy? For years, Ethereum has followed what Vitalik Buterin and other core developers have called a "rollup-centric roadmap" - basically admitting that L2 solutions would be the main path to scalability, while the base layer focuses on security, decentralization, and data availability.

This division of labor made perfect sense given Ethereum's limitations. With L1 throughput capped, moving transaction execution to L2 rollups let the ecosystem process way more transactions while preserving what makes Ethereum special. Rollups could crunch thousands of transactions off-chain, then just post the compressed results back to Ethereum for security and finality.

The Dencun upgrade in March 2024 marked a huge milestone in this strategy. By introducing blobs – those special data structures designed specifically for rollups – Ethereum slashed data costs for L2s overnight. Blobs gave rollups their own dedicated space that didn't compete with regular transactions, making everything more efficient and cost-effective.

"Blobs are like giving rollups their own dedicated highway, rather than forcing them to share congested city streets with other traffic," as Ethereum researcher Proto explained.

But Pectra's second deployment, with its potential to significantly boost throughput, raises an intriguing question: Are we witnessing a subtle shift in Ethereum's scaling philosophy? If the base layer becomes dramatically more efficient at processing transactions, which is a form of vertical scaling of L1 rather than the horizontal scaling that rollups provide, might the relationship between L1 and L2s evolve in unexpected ways?

Before diving deeper, it's worth noting that Dencun has already created some surprising ripple effects in the L2 ecosystem. By dramatically cutting data posting costs, it completely transformed the economics of rollups.

After Dencun, rollup operating costs absolutely plummeted, creating eye-popping profit margins for the major rollups. Optimism's margins shot up to between 98-99%, while Arbitrum reached 70-99%, compared to pre-Dencun margins that rarely topped 50%. This sudden economic shift had mixed effects – cheaper fees for users, but less revenue for Ethereum itself as less ETH got burned through EIP-1559.

Optimism and Arbitrum now generate massive revenue streams for their treasuries and token holders, as the gap between what users pay and what rollups spend on Ethereum has widened dramatically. But this leads to a compelling question: If Pectra's second deployment further boosts Ethereum's throughput through gas limit increases or faster slots, could some of this economic activity flow back to L1?

Pectra's throughput improvements might trigger a subtle rebalancing between Ethereum's base layer and its rollup ecosystem. If throughput jumps by 33% through slot time reduction (EIP-7782) or potentially doubles through gas limit increases (EIP-7783), basic supply and demand suggests transaction fees would drop during normal conditions. This could make the base layer more attractive for transactions that don't need absolute rock-bottom fees.

Meanwhile, "based rollups" have emerged as a potential sweet spot between traditional L2s and L1. These rollups tap into Ethereum's validator set for sequencing instead of using centralized sequencers, making them more censorship resistant and allowing a tighter economic alignment with Ethereum.

A big challenge for based rollups has been latency: with Ethereum's 12-second slot time, they just couldn't compete with the near-instant confirmations offered by centralized sequencers. EIP-7782's proposed reduction to 8-second slots would narrow this gap. Plus, the emerging concept of "preconfirmations" – where validators provide economic guarantees about transaction inclusion before blocks are produced – could make the user experience even smoother.

(i.e. putting their money where their mouth is and basically guaranteeing a user they’ll include their TX)

Based rollups also offer a promising solution to the fragmentation problem that plagues today's L2 landscape. By sharing Ethereum's validators as a common sequencing layer, they could potentially enable cross-rollup composability that's simply impossible with stand-alone centralized sequencers. This could help restore Ethereum's powerful network effects while maintaining all the scaling advantages rollups bring to the table.

Ethereum's long-term sustainability depends on finding the right balance of value capture between L1 and L2. Based rollups create a more symbiotic relationship by returning sequencing fees and MEV to Ethereum validators, strengthening L1 security while benefiting from rollup scalability. If Pectra's second deployment significantly boosts throughput, this aligned architecture could capture more transaction volume, benefiting both validators and users across the ecosystem.

As we look toward Ethereum's scaling future, we might be witnessing what biologists call "convergent evolution" – different mechanisms independently evolving similar solutions to common problems. Ethereum L1 is becoming more efficient and less expensive through upgrades like Pectra, while rollups are becoming more decentralized and aligned with Ethereum through models like based sequencing.

So in this converging landscape, users might choose between base Ethereum for maximum security and composability, traditional rollups for ultra-low fees and specialized execution, or based rollups for that perfect balance of scalability, security, and economic alignment.

But there are still some important questions to be asked: Will increased L1 throughput drive a significant return of activity to the base layer? Can based rollups with preconfirmations deliver the user experience needed to compete with centralized sequencers? How will sequencing fees and MEV distribute between validators and L2 operators? Will the efficiency gains from shared sequencing outweigh the coordination challenges it creates?

The answers to these questions will shape Ethereum's scaling story. What's becoming clear is that the old L1 vs. L2 dichotomy is evolving into more of a complementary relationship.

Realistically, Pectra's second deployment is more than just throughput improvements – it signals a fundamental shift in scaling philosophy. Rather than simply pushing activity outward, Ethereum is finally strengthening the base layer while building bridges between layers.

With base layer improvements and innovations like based rollups, it’s clear that the future isn't about choosing between layers, but about finding the right economic and decentralization balance while allowing users to choose their preferred mix of both.


As Hudson Jameson, a core developer liaison at the Ethereum Foundation, put it: “EIP-1 will be the constitution of this new nation… Not etched in stone, but written in collaborative code.”

Shortly after the first EIP came one of the most important EIPs in the network's history, EIP-20.

This proposal introduced the ERC-20 token standard that we all know and love. It enabled developers to create interoperable tokens on Ethereum overnight. And suddenly, blockchain wasn’t just about money: it became a playground for decentralized finance (DeFi), digital art (NFTs), and DAOs. Even though EIP-20 unlocked Ethereum's creative potential, the network faced its first existential crisis just months later.

In June 2016, an attacker exploited a vulnerability in "The DAO", a $150 million decentralized venture fund built on Ethereum, draining 3.6 million ETH (worth $60M at the time). The community faced an impossible choice: preserve the "code is law" ethos and let the theft stand, or override the blockchain's history to recover the funds.

This sparked Ethereum's first hard fork in July of 2016. Through heated debates on Reddit and developer forums, 85% of miners voted to implement EIP-779, reversing the hack by invalidating the attacker's transactions. The minority who rejected this change continued operating the original chain as Ethereum Classic (ETC), creating a permanent chain split.

What made this intervention possible was a unique circumstance: The DAO's code had a 30-day delay before stolen funds could be withdrawn, giving developers a critical window to act. The Ethereum ecosystem was also much smaller and less interconnected back then. When Bybit exchange lost $1.4B in ETH to hackers in February of 2025, rollback calls predictably surfaced again, but the landscape had fundamentally changed. With DeFi protocols, NFT markets, and cross-chain bridges now deeply intertwined, hackers could instantly scatter stolen funds across the ecosystem, making a surgical intervention impossible without massive collateral damage.

The Hard Fork Meta

Definition:

- The “difficulty bomb” is a mechanism inside Ethereum’s PoW designed to make mining progressively harder as time passed to force miners to eventually switch to PoS.

- zk-SNARKs are an early form of zero-knowledge cryptography that enable privacy-enhancing transactions.

- In the context of Ethereum upgrades, a "fork" is a protocol change that creates a divergence from the previous blockchain version, with hard forks requiring all nodes to update software to maintain network participation while soft forks remain backward-compatible. Named upgrade forks (like "Shanghai" or "Dencun") represent scheduled implementations of multiple EIPs that collectively advance Ethereum's capabilities.

Even though this unfortunate event created a philosophical split between the members of the community, the Ethereum network gained something undeniably more important, “the hard fork meta”.

In nature, genetic mutations sometimes create new species. Blockchains do this through forks.

Back in 2016, when miners split Ethereum into two chains (one where the DAO hack was reversed, ETH, and one where it wasn’t, ETC), they revealed something profound: forks are blockchain’s mutation mechanism. Forks allow networks to adapt when their environment changes, and Ethereum has had quite a few of these mutations since.

Following the DAO Fork, Ethereum’s next major “mutation” came with the Byzantium upgrade. Byzantium was part of the broader Metropolis roadmap, and it introduced significant improvements like zk-SNARKs and paved the way for the transition to Proof of Stake (PoS) by reducing block rewards and delaying the “difficulty bomb”.

After the Byzantium update, Ethereum introduced Constantinople in 2019, which reduced gas costs for certain operations and further postponed the difficulty bomb, signaling that Ethereum was gearing up for a significant transformation.

By 2021, Ethereum had already grown into a multi-billion-dollar ecosystem, but one major issue still remained: gas fees. Ethereum’s transaction fee model was unpredictable and often led to users overpaying for transactions.

Enter EIP-1559, the most famous proposal of the London hard fork, which introduced a base fee burn mechanism that permanently removes a portion of ETH from circulation with every transaction. Suddenly, Ethereum had theoretical deflationary pressure whenever network activity surged, pointing toward a supply reduction over time.

This wasn’t just a technical upgrade; it changed Ethereum’s economic model forever. ETH was no longer just an inflationary asset: at times of high demand, it could become “ultrasound money”.
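The mechanics behind that burn can be sketched from the base fee update rule in the EIP-1559 specification: the base fee moves by up to 1/8 per block depending on how far the parent block's gas usage was from its target, and the base fee paid on every unit of gas is burned. The 15 million gas target and 1 gwei starting base fee below are illustrative values only.

```python
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # constant from the EIP-1559 specification


def next_base_fee(parent_base_fee: int, parent_gas_used: int,
                  parent_gas_target: int) -> int:
    """Base fee (in wei) for the next block, per the EIP-1559 update rule."""
    if parent_gas_used == parent_gas_target:
        return parent_base_fee
    if parent_gas_used > parent_gas_target:
        # Busy block: raise the base fee (by at least 1 wei).
        delta = max(parent_base_fee * (parent_gas_used - parent_gas_target)
                    // parent_gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR, 1)
        return parent_base_fee + delta
    # Quiet block: lower the base fee.
    delta = (parent_base_fee * (parent_gas_target - parent_gas_used)
             // parent_gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR)
    return parent_base_fee - delta


def burned_wei(base_fee: int, gas_used: int) -> int:
    """ETH (in wei) removed from circulation by one block."""
    return base_fee * gas_used


GWEI = 10 ** 9
TARGET = 15_000_000  # illustrative gas target

full_block = next_base_fee(1 * GWEI, 2 * TARGET, TARGET)
empty_block = next_base_fee(1 * GWEI, 0, TARGET)
print(full_block, empty_block, burned_wei(1 * GWEI, TARGET))
```

A run of full blocks therefore compounds the base fee by 12.5% per block, which is why the burn grows quickly when network activity surges.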

But all these forks were just stepping stones to Ethereum’s most ambitious transformation yet: The Merge. For years, Ethereum had planned to transition from Proof-of-Work (PoW) to Proof-of-Stake (PoS), but the process was long and complex.

On September 15th, 2022, after many years of deep research, testing, and delays, Ethereum finally merged its Execution Layer (EL) with the Beacon Chain, a PoS network running in parallel since 2020. By changing the consensus mechanism, this upgrade reduced Ethereum's energy consumption by roughly 99.95% overnight.

Ethereum transitioned from relying on miners competing to secure the network to a capital-based security model, where validators staked ETH to participate in consensus. This shift marked a significant evolution in the hard fork methodology, moving beyond mere bug fixes and optimizations. It had now become a means to redefine the very essence of Ethereum.

Ethereum’s Biggest Hard Fork: Pectra

Definition:

- Precompiles in Ethereum are optimized, built-in smart contract functions that handle computationally expensive operations more efficiently than executing them in Solidity or the EVM’s general execution layer. Instead of using standard EVM opcodes, which can be inefficient and gas-intensive, precompiles provide direct access to optimized implementations written in low-level code.

- Opcodes in Ethereum are low-level machine instructions executed by the Ethereum Virtual Machine (EVM). They define the fundamental operations of smart contracts, such as arithmetic (+, -), storage access, and contract calls. Each opcode has a specific gas cost and operates on the EVM stack.

Pectra, a blend of Prague and Electra, is the next major upgrade, with its initial deployment scheduled for May 2025. The upgrade is defined under EIP-7600, and due to the sheer number of EIPs included, developers were compelled to split it into two parts.

Unlike previous hard forks that typically focused on a few major changes, the first deployment of the two-part Pectra upgrade includes 11 EIPs that address a wide range of enhancements, from blob optimizations to staking improvements and user experience upgrades. While the total number of EIPs in the full Pectra upgrade (including the second deployment) is still undetermined, we can explore the EIPs included in the first deployment:

EIP-2537 - Precompile for BLS12-381 curve operations:

Defined as \( y^2 = x^3 + 4 \), the BLS12-381 curve is an elliptic curve used for pairing-based operations in cryptography.

To put it simply, the BLS12-381 curve plays a key role in signature verification (BLS signature schemes and zk-SNARKs) used for Ethereum’s Proof-of-Stake.

However, as it currently stands, the Ethereum Virtual Machine (EVM) does not have precompiles for operations on the BLS12-381 curve, making any operations on the curve far less efficient and more expensive than they need to be.

Precompiles provide direct access to efficient low-level code — not having a precompile for such a core element of PoS is like doing taxes, every single day, WITHOUT a calculator! The addition of precompiles for the BLS curve will remove any unnecessary computational and gas overhead for its operations.
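As a rough illustration of the curve equation y² = x³ + 4, the following Python sketch checks points over a toy prime field. The modulus 11 is an assumption chosen purely for readability. The real BLS12-381 field prime is 381 bits long, and that is one reason this arithmetic is costly when built from general-purpose EVM opcodes.

```python
# Toy check of y^2 = x^3 + 4 over a small prime field (NOT the real BLS12-381 field).
TOY_PRIME = 11  # assumption for illustration only


def is_on_curve(x: int, y: int, p: int = TOY_PRIME) -> bool:
    """True if (x, y) satisfies y^2 = x^3 + 4 modulo p."""
    return (y * y - (x * x * x + 4)) % p == 0


def curve_points(p: int = TOY_PRIME) -> list[tuple[int, int]]:
    """Enumerate every affine point on the toy curve by brute force."""
    return [(x, y) for x in range(p) for y in range(p) if is_on_curve(x, y, p)]
```

For example, `is_on_curve(0, 2)` holds because 2² = 0³ + 4. A precompile performs the same kind of field arithmetic, plus point addition, scalar multiplication, and pairings, in optimized native code instead.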

EIP-2935 - Save historical block hashes in state:

Currently, Ethereum’s BLOCKHASH opcode only allows retrieving block hashes from the last 256 blocks. If a smart contract needs an older block hash, it has to rely on external data sources (like off-chain oracles or archival nodes), which is inefficient and introduces trust dependencies.

By storing the block hashes of the last 8191 blocks (the window used in the final version of the EIP), EIP-2935 makes this data available on-chain, improving transparency, security, and usability for smart contracts. This also means:

- Improved Trustless Light Clients - Light clients rely on block headers to verify chain integrity. By making historical hashes accessible on-chain, light clients can operate more trustlessly without needing full nodes or third-party services.

- Improved Fraud Proofs - Many layer 2 rollups (especially optimistic rollups) use fraud proofs, which require referencing older block hashes for verification. Currently, rollups must depend on full nodes or external data providers to fetch past block hashes. Storing them on-chain removes this external dependency and improves rollup security.
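The storage scheme can be pictured as a ring buffer: each new block hash overwrites the oldest one, so a fixed number of recent hashes is always available. The Python sketch below is a simplified model under that assumption. The class and method names are hypothetical, the window size is a parameter, and the real system contract is EVM bytecode rather than anything like this.

```python
# Sketch of a ring buffer of block hashes (illustrative; not the actual system contract).
class BlockHashHistory:
    def __init__(self, window: int):
        self.window = window                # how many past hashes stay available
        self.slots: dict[int, bytes] = {}   # storage slot -> block hash
        self.latest = -1                    # number of the newest stored block

    def store(self, block_number: int, block_hash: bytes) -> None:
        """Write a hash into slot (block_number mod window), overwriting the oldest entry."""
        self.slots[block_number % self.window] = block_hash
        self.latest = block_number

    def get(self, block_number: int) -> bytes:
        """Return the hash if the block is still inside the window, else raise."""
        if block_number > self.latest or block_number <= self.latest - self.window:
            raise LookupError("block hash outside the served window")
        return self.slots[block_number % self.window]
```

With a window of 4 and blocks 0 through 9 stored, blocks 6 to 9 can be looked up and anything older raises an error. Storage stays constant in size no matter how long the chain grows.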

EIP-6110 - Supply validator deposits on-chain:

Ethereum’s PoS system has a gap between its two main components:

- Execution Layer (EL) - Ethereum as we know it, where transactions and smart contracts run.

- Consensus Layer (CL) - Beacon Chain, which manages staking and validator consensus.

Currently, Ethereum’s EL doesn’t store validator deposits. Instead, deposits are processed off-chain by the Consensus Layer, which means new validators must rely on external sources (such as full CL nodes or third-party APIs) to confirm their deposit status.

This creates inefficiencies:

- Slower Validator Bootstrapping – New validators must fetch deposit data externally, adding trust dependencies.

- Centralization Risks – Stakers often rely on third-party services to confirm deposits.

- Limited Smart Contract Access – Contracts and rollups can’t verify deposits natively, requiring external oracles.

EIP-6110 fixes these inefficiencies by storing validator deposits on-chain, making staking faster and trustless while reducing off-chain dependencies.

EIP-7002 - Execution layer triggerable exits:

Currently, validator exits are handled exclusively by the Consensus Layer, meaning validators must rely on their own nodes or a third-party service to submit exit requests.

This creates:

- Delays & Complexity – Validators must interact with the CL, which isn’t designed for easy user interaction.

- Trust Dependencies – Validators without direct access to a CL node must rely on external services (centralized APIs, staking providers) to submit exit requests.

EIP-7002 allows validators to trigger their own exits directly from the Execution Layer instead of relying solely on the Consensus Layer. This means a validator can submit an on-chain transaction to initiate their exit rather than waiting for an external Beacon node to process the request.

EIP-7251 - Increase the MAX_EFFECTIVE_BALANCE:

Today, the MAX_EFFECTIVE_BALANCE is fixed at 32 ETH, limiting every validator to that amount no matter how much ETH its operator holds.

This constraint leads to a high number of validators under one operator: to put as much of their ETH to work as possible, an operator is forced to run multiple validators, making many of these single-operator-run validators:

- “Redundant” from the perspective of the operator

- And bad for the network, as more validators increase network overhead and complexity.

To solve these redundancy and complexity issues, EIP-7251 raises the cap on a validator’s effective balance, allowing a single validator to control more than 32 ETH (up to a new limit of 2048 ETH).

It also introduces a new constant, the MIN_ACTIVATION_BALANCE, which remains at 32 ETH to ensure solo-stakers can still participate without consolidation.

The EIP will:

- Allow large node operators who currently must run many separate validators (each capped at 32 ETH) to consolidate their stake into fewer validators.

- (With fewer validators) Reduce the overall P2P communication overhead, lower the amount of data processed (such as fewer BLS signature aggregations), and decrease the BeaconState memory footprint.

- Benefit smaller stakers too: if they have, say, 40 ETH, they don’t have to wait until they accumulate 64 ETH to run two validators. They can run a single validator with a balance above the minimum.
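The consolidation effect is easy to see with a little arithmetic. The Python sketch below compares how many validators a given stake needs under the old 32 ETH cap and under the 2048 ETH cap. The function names are hypothetical, and the model ignores rewards, fees, and the consolidation process itself.

```python
import math

MIN_ACTIVATION_BALANCE = 32       # ETH, minimum needed to activate a validator
OLD_MAX_EFFECTIVE_BALANCE = 32    # ETH, the cap before EIP-7251
NEW_MAX_EFFECTIVE_BALANCE = 2048  # ETH, the cap under EIP-7251


def validators_before(stake_eth: int) -> tuple[int, int]:
    """Return (validator count, ETH left idle) when each validator is capped at 32 ETH."""
    count = stake_eth // OLD_MAX_EFFECTIVE_BALANCE
    return count, stake_eth - count * OLD_MAX_EFFECTIVE_BALANCE


def validators_after(stake_eth: int) -> int:
    """Return the fewest validators that can put the whole stake to work under the new cap."""
    if stake_eth < MIN_ACTIVATION_BALANCE:
        return 0
    return math.ceil(stake_eth / NEW_MAX_EFFECTIVE_BALANCE)
```

In this model, an operator with 10,000 ETH goes from 312 validators (with 16 ETH idle) to 5, while a solo staker with 40 ETH goes from one validator with 8 ETH idle to one validator using all 40.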

EIP-7549 - Move committee index outside Attestation:

Currently, every attestation a validator signs carries the committee index (the field identifying which validator committee the attestation belongs to) inside the signed AttestationData, right next to the actual consensus vote (the slot, the beacon block root, and the source and target checkpoints).

This creates complications:

- Aggregation Limits – Two attestations with identical votes but different committees produce different signed messages, so they can only be aggregated within a single committee.

- Redundant Verification – With many committees per slot, the same vote ends up being verified as many separate aggregate signatures.

As its title suggests, EIP-7549 moves the committee index outside the signed attestation message: the index field in AttestationData is fixed to zero, and the Attestation object gains a committee_bits field that records which committees contributed. This simple structural change:

- Simplifies Aggregation – Attestations with the same vote can be aggregated across committees, since they now sign identical data.

- Cuts Verification Work – Fewer aggregate signatures, and therefore fewer pairing operations, are needed to verify a slot's attestations.

While the change is minor, it produces a data structure that better matches how attestations are actually used in the protocol.

EIP-7623 - Increase calldata cost:

Currently, calldata costs are relatively low, which allows for larger block sizes when transactions include substantial amounts of calldata. This can lead to significant variability in block sizes, with potential maximums reaching up to 7.15 MB (average block sizes are around 100 KB).

EIP-7623 will increase the gas cost associated with calldata, particularly for transactions that predominantly post data, which will:

- Reduce the maximum block size, and

- Reduce block size variability, improving network efficiency and stability.
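The pricing rule can be sketched in a few lines of Python. The constants below reflect my reading of the EIP-7623 specification (a floor of 10 gas per calldata "token", where a zero byte counts as one token and a non-zero byte as four) and should be treated as assumptions. The function names are hypothetical, and contract-creation costs are left out for brevity.

```python
# Sketch of the EIP-7623 calldata floor (constants assumed from the EIP; names are hypothetical).
TX_BASE_COST = 21_000
STANDARD_TOKEN_COST = 4          # gas per token under the existing pricing
TOTAL_COST_FLOOR_PER_TOKEN = 10  # gas per token under the new floor


def calldata_tokens(calldata: bytes) -> int:
    """Zero bytes count as 1 token, non-zero bytes as 4 tokens."""
    zero_bytes = calldata.count(0)
    return zero_bytes + 4 * (len(calldata) - zero_bytes)


def gas_used(calldata: bytes, execution_gas: int) -> int:
    """Charge the larger of the standard cost and the calldata floor."""
    tokens = calldata_tokens(calldata)
    standard = STANDARD_TOKEN_COST * tokens + execution_gas
    floor = TOTAL_COST_FLOOR_PER_TOKEN * tokens
    return TX_BASE_COST + max(standard, floor)
```

Under these assumptions, a transaction that only posts 1,000 non-zero bytes pays 61,000 gas instead of 37,000, while a transaction with the same calldata and 100,000 gas of execution pays 137,000 either way. Data-heavy transactions get more expensive and ordinary contract interactions are left alone.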

EIP-7685 - General purpose execution layer requests:

Right now, communication between Ethereum’s EL and CL is rigid and specialized:

- The EL is responsible for processing transactions and building blocks,

- While the CL handles validator consensus and finalization.

When the EL needs the CL to perform an action — like validator exits or withdrawals — it relies on separate predefined mechanisms for each type of request. These operations often involve dedicated transaction types, indirect signaling methods, or extra processing steps. This fragmentation makes the system more complex, harder to upgrade, and less flexible for future extensions.

EIP-7685 introduces a uniform format for EL-to-CL messages and commits to them through a new block header field, requests_hash. Each request includes:

- A type prefix (a single byte defining what kind of operation it is),

- and an associated payload.

Instead of hardcoding separate mechanisms for different features (like validator exits, partial withdrawals, or consolidation), this EIP provides one unified system. It enables the EL to embed these requests in block bodies, which the CL later processes as part of its state transition.

This way, similar requests can be aggregated and processed together, reducing overhead and simplifying validation, which can lead to faster, more resource-efficient block processing.
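A minimal Python sketch of the "type plus payload" idea follows. The type byte values are my understanding of what the related EIPs assign and should be read as assumptions. The payloads are placeholders rather than real encodings, and the helper names are hypothetical.

```python
from collections import defaultdict

# Request type bytes as I understand them from the related EIPs (assumption).
DEPOSIT_REQUEST = 0x00        # EIP-6110
WITHDRAWAL_REQUEST = 0x01     # EIP-7002 (exits and partial withdrawals)
CONSOLIDATION_REQUEST = 0x02  # EIP-7251


def encode_request(request_type: int, payload: bytes) -> bytes:
    """A request is one type byte followed by an opaque payload."""
    if not 0 <= request_type <= 0xFF:
        raise ValueError("request type must fit in one byte")
    return bytes([request_type]) + payload


def decode_request(encoded: bytes) -> tuple[int, bytes]:
    """Split an encoded request back into (type, payload)."""
    if not encoded:
        raise ValueError("empty request")
    return encoded[0], encoded[1:]


def group_by_type(requests: list[bytes]) -> dict[int, list[bytes]]:
    """Group payloads by type so each kind can be processed together."""
    grouped: dict[int, list[bytes]] = defaultdict(list)
    for encoded in requests:
        request_type, payload = decode_request(encoded)
        grouped[request_type].append(payload)
    return dict(grouped)
```

Because the envelope never changes, adding a new kind of request in a future fork only means reserving another type byte, not designing a new channel between the two layers.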

EIP-7691 - Blob throughput increase:

As we all know, Ethereum’s scalability relies on Layer 2 (L2) solutions, like rollups, which compress and batch transactions before submitting them to the main chain.

These rollups depend on Ethereum’s data availability to store compressed transaction data efficiently and use blobs (specific data structures) to post large batches of transaction data more cost-effectively.

Currently, the protocol targets a relatively modest 3 blobs per block, with a maximum of 6. EIP-7691 raises these limits to a target of 6 and a maximum of 9 blobs per block.

With more blobs per block, the network can handle higher data loads, which reduces congestion and leads to smoother, more predictable performance even during periods of high demand.

Additionally, the improved data throughput enables rollups to operate more cost-effectively, which ultimately lowers transaction fees for users.
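To put the blob counts into data terms, here is a short Python calculation. It assumes a blob size of 128 KiB (4096 field elements of 32 bytes, per EIP-4844 as I understand it) and a 12-second slot. Both figures come from outside this article, and the function names are hypothetical.

```python
# Back-of-the-envelope blob throughput (blob size and slot time are assumptions).
BYTES_PER_BLOB = 4096 * 32  # 131,072 bytes = 128 KiB
SECONDS_PER_SLOT = 12


def blob_kib_per_block(blobs: int) -> int:
    """Blob data carried by one block, in KiB."""
    return blobs * BYTES_PER_BLOB // 1024


def blob_kib_per_second(blobs: int) -> float:
    """Average blob data rate, in KiB per second."""
    return blob_kib_per_block(blobs) / SECONDS_PER_SLOT
```

Under these assumptions, moving the target from 3 to 6 blobs doubles sustained capacity from 384 KiB to 768 KiB per block (32 to 64 KiB per second), and the maximum rises from 768 KiB to 1,152 KiB per block.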

EIP-7702 - Set EOA account code:

We’ve all heard of the much-awaited Account Abstraction — “EOAs that can do more than just initiate transactions” — it’s a topic that we’ve already discussed in detail, but to put it simply, Ethereum has two distinct account types that cannot overlap:

- Externally Owned Accounts (EOAs) - Controlled by private keys but cannot contain code

- Contract Accounts - Contain executable code but have no associated private keys

This rigid division creates inefficiencies like:

- 🖥️ Limited Functionality – EOAs can only initiate basic transactions, lacking programmable features

- 🤔 Complex Architectures – Users needing programmability must deploy separate contract wallets or proxy contracts

- 📈 Higher Gas Costs – Deploying and managing these extra contracts consumes unnecessary gas

By introducing a new transaction type that lets EOAs set their own code (as a pointer to existing contract code) while maintaining private key control, EIP-7702 marks the first real step towards full Account Abstraction on Ethereum Mainnet.

This change will blend the control of private keys with the programmability of smart contracts in a single account while reducing gas costs (no separate contract deployments needed), introducing cleaner security models, and preserving address identity for users.
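As I understand the EIP-7702 specification, the code written to the EOA is not a full contract but a short pointer to one: a fixed three-byte prefix followed by the address of the contract whose code should run. The Python sketch below models only that pointer. The prefix value is an assumption taken from my reading of the EIP, and the function names are hypothetical.

```python
from typing import Optional

DELEGATION_PREFIX = bytes.fromhex("ef0100")  # assumed from the EIP-7702 specification


def delegation_designator(contract_address: bytes) -> bytes:
    """Build the 23-byte code value that points an EOA at a contract's code."""
    if len(contract_address) != 20:
        raise ValueError("address must be 20 bytes")
    return DELEGATION_PREFIX + contract_address


def delegated_to(account_code: bytes) -> Optional[bytes]:
    """Return the target address if the code is a delegation pointer, else None."""
    if len(account_code) == 23 and account_code.startswith(DELEGATION_PREFIX):
        return account_code[3:]
    return None
```

Seen this way, the account keeps its address and private key, and many users can point at the same audited wallet implementation rather than each deploying their own.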

EIP-7840 - Add blob schedule to EL config files:

Currently, whenever Ethereum needs to adjust blob-related parameters (like how many blobs can fit in a block), it requires a hard fork of the entire network.

This means all node operators must coordinate to update their software simultaneously, which is a slow, complex process.

It’s like needing city council approval every time you want to adjust the number of buses running on a route.